Multi-Tenant Modern App

Onboarding, Identity, and a Modern Client

Now that we have a fully deployed and functional monolith, it’s time to start looking at what it will take to move this monolith to a multi-tenant, modern architecture. The first step in that process is to introduce a way to have tenants onboard to your system. This means moving away from the simple login mechanism we had with our monolith and introducing a way for tenants to follow an automated process that will allow us to sign up and register as many tenants as we want. As part of this process, we’ll also be introducing a mechanism for managing tenant identities. Finally, we’ll also create a new client experience that extracts the client from our server-side web/app tier and moves it to S3 as a modern React application.

It’s important to note that this workshop does not dive deep into the specifics of onboarding and identity. These topics could consume an entire workshop, and we recommend that you leverage our other content on these topics to fill in the details. The same is true for the React client. Generally, there aren’t many multi-tenant migration considerations that change your approach to building and hosting a React application on AWS. There are plenty of examples and resources that cover that topic. Our focus for this workshop is more on the microservices pieces of the migration and the strategies we’ll employ with our onboarding automation to allow us to cut over gracefully from single-tenant to multi-tenant microservices.

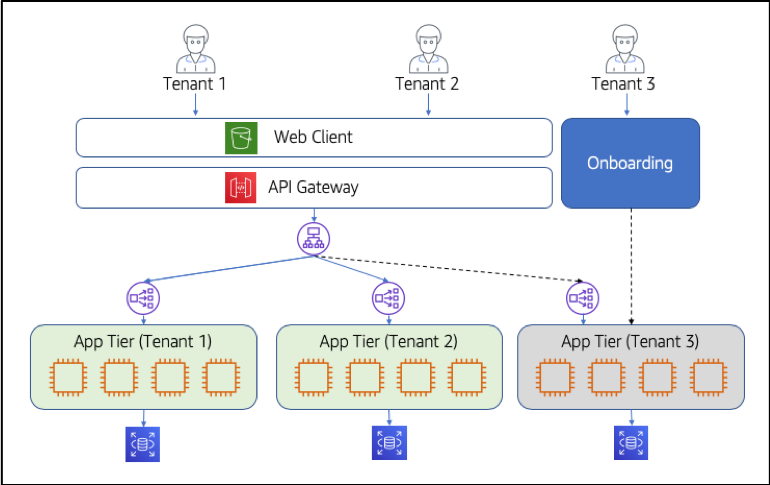

Our goal here, then, we’ll be to intentionally gloss over some of the details of onboarding, identity, and our new client and get into how our automated onboarding will orchestrate the creation of tenant resource. A conceptual view of the architecture would be as follows:

Here you’ll see that we now support a separate application tier for each tenant in your system, each of which has its own storage. This allows us to leave portions of our code in our monolith while still operating in a multi-tenant fashion. In this example, Tenant 1 and Tenant 2 are presumed to have already onboarded. Our new S3-based application continues to interact with these tenant deployments via the API Gateway. The challenge here is that we need to introduce some notion of routing that allows us to direct each request to the correct tenant cluster. This is achieved via an Application Load Balancer (ALB) that inspects the headers of incoming requests and routes the traffic to the Network Load Balancer (NLB) that sits in front of each of these clusters.
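To make the header-based routing idea concrete, here is a minimal, in-memory sketch of the decision the ALB makes for each request. It is a conceptual model only, not the workshop’s configuration: the header name `x-tenant-id`, the `RoutingRule` type, and the `nlb-tenant-N` target names are all assumptions introduced for illustration.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence

# Hypothetical header carrying the tenant identifier; the real header
# name used by the workshop may differ.
TENANT_HEADER = "x-tenant-id"


@dataclass(frozen=True)
class RoutingRule:
    tenant_id: str
    target: str  # illustrative name of the NLB in front of the tenant's cluster


def resolve_target(
    headers: Mapping[str, str], rules: Sequence[RoutingRule]
) -> Optional[str]:
    """Return the target for the tenant named in the request headers.

    Returns None when the header is missing or no rule matches.
    """
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    tenant_id = normalized.get(TENANT_HEADER)
    if tenant_id is None:
        return None
    for rule in rules:
        if rule.tenant_id == tenant_id:
            return rule.target
    return None


# Tenant 1 and Tenant 2 are assumed to have already onboarded.
RULES = [
    RoutingRule("tenant-1", "nlb-tenant-1"),
    RoutingRule("tenant-2", "nlb-tenant-2"),
]

if __name__ == "__main__":
    print(resolve_target({"X-Tenant-ID": "tenant-2"}, RULES))
```

In an actual deployment this lookup is not application code; it would be expressed as listener rules on the load balancer, each with a condition on an HTTP header and a forward action to the tenant’s target.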

Now, as new tenants are added to the system, we must provision a new instance of the application tier for this tenant (shown as Tenant 3). This automated process will both provision the new tier and configure the rules of the ALB to route traffic for Tenant 3 to this cluster.
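The two effects of onboarding described above (provisioning a new tier and adding a routing rule) can be sketched as a single function over an in-memory model. This is an illustrative sketch under stated assumptions, not the workshop’s automation: the `Environment` type, the `app-tier-<tenant>` naming scheme, and the rule dictionary layout are hypothetical, and no AWS calls are made.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Environment:
    """Hypothetical in-memory stand-in for the deployed infrastructure."""
    clusters: Dict[str, str] = field(default_factory=dict)  # tenant_id -> cluster name
    rules: List[dict] = field(default_factory=list)         # routing rules, in priority order


def onboard_tenant(env: Environment, tenant_id: str) -> str:
    """Provision an application tier for the tenant and route traffic to it."""
    if tenant_id in env.clusters:
        raise ValueError(f"tenant {tenant_id!r} is already onboarded")

    # Step 1: provision the new application tier (simulated by naming it).
    cluster = f"app-tier-{tenant_id}"
    env.clusters[tenant_id] = cluster

    # Step 2: add a routing rule for the tenant, using the next free priority.
    priority = max((rule["priority"] for rule in env.rules), default=0) + 1
    env.rules.append(
        {"priority": priority, "tenant_id": tenant_id, "target": cluster}
    )
    return cluster


if __name__ == "__main__":
    env = Environment()
    for tenant in ("tenant-1", "tenant-2", "tenant-3"):
        onboard_tenant(env, tenant)
    for rule in env.rules:
        print(rule)
```

Keeping both steps in one operation reflects the point of the automation: a tenant’s tier should never exist without a rule that routes to it, and a rule should never point at a tier that was not provisioned.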

Our goals for this lab are to enable this new onboarding automation and register some tenants to verify that the new resources are being allocated as we need. Again, the goal here is to only highlight this step and stay out of the weeds of the identity and underlying orchestration that enables this process.

What You’ll Be Building

Before we can start to dig into the core of decomposing our system into services, we must introduce the notion of tenancy into our environment. While it’s tempting to focus on building new Lambda functions first, we have to start by creating the mechanisms that will be core to creating new tenants, authenticating them, and associating tenant context with them.

A key part of this lab is building out an onboarding mechanism that will establish the foundation we’ll need to support the incremental migration of our system to a multi-tenant model. This means introducing automation that will orchestrate the creation of each new tenant silo and putting all the wiring in place to successfully route tenants, ideally without changing too much of our monolith to support this environment. As part of enabling this new multi-tenant onboarding, we’ll also extract the UI from the server and move it to a modern framework running in the browser. With this context as our backdrop, here are the core elements of this exercise:

- Prepare the new infrastructure that’s needed to enable the system to support separate silos for each tenant in the system. This will involve putting in new routing mechanisms that will leverage the tenant context we’re injecting and route each tenant to their respective infrastructure stack.

- Introduce onboarding and identity that will allow tenants to register, create a user identity in Cognito, and trigger the provisioning of a new stack for each tenant. This orchestration is at the core of enabling your first major step toward multi-tenancy, enabling you to introduce tenant context (as part of identity) and automation that configures and provisions the infrastructure to enable the system to run as a true siloed SaaS solution.

- Refactor the application code of our solution to move away from the MVC model we had in Lab 1 and shift to a completely REST-based API for our services. This means converting the controller we had in Lab 1 into an API and connecting that API to an API Gateway.

- Move from a monolith UI to a modern React UI, moving the code to an S3 bucket and enabling us to align with best practices for fully isolating the UI from the server. This is a key part of our migration story since it narrows the scope of what is built, deployed, and served from the application tier. It also sets the stage for our service decomposition efforts.

- Dig into the weeds of how the UI connects to the application services via the API Gateway. We’ll find some broken code in our UI and reconnect it to the API Gateway to expose you to the new elements of our service integration.

- Use our new UI to onboard new tenants and exercise the onboarding process. The goal here is to illustrate how we will provision new users and tenants. A key piece of this will involve the provisioning of a new application tier (or monolith) for each tenant that onboards. This will allow us to have a SaaS system where each tenant is running in its own silo while appearing to be a multi-tenant system to tenants.
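Several of the steps above depend on tenant context travelling with the user’s identity. As a rough illustration of what that means, the sketch below reads a tenant identifier out of the claims of a JWT-style token. Everything specific here is an assumption: the claim name `custom:tenant_id` is hypothetical (Cognito prefixes custom attributes with `custom:`, but the attribute this workshop uses is not stated here), and the helper that builds a demo token exists only so the example is self-contained. The code deliberately skips signature verification, which a real service must perform before trusting any claim.

```python
import base64
import json
from typing import Optional

# Hypothetical claim name for the tenant identifier.
TENANT_CLAIM = "custom:tenant_id"


def _b64url_decode(segment: str) -> bytes:
    # JWT segments omit base64 padding, so restore it before decoding.
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def _b64url_encode(data: dict) -> str:
    raw = json.dumps(data).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def tenant_from_token(token: str) -> Optional[str]:
    """Extract the tenant claim from a token WITHOUT verifying its signature.

    Illustration only: production code must validate the signature,
    issuer, audience, and expiry before using any claim.
    """
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a three-part token (header.payload.signature)")
    claims = json.loads(_b64url_decode(parts[1]))
    return claims.get(TENANT_CLAIM)


def make_unsigned_demo_token(claims: dict) -> str:
    """Build a token-shaped string for the demo; it carries no real signature."""
    header = {"alg": "none", "typ": "JWT"}
    return f"{_b64url_encode(header)}.{_b64url_encode(claims)}.placeholder"


if __name__ == "__main__":
    token = make_unsigned_demo_token({"sub": "user-1", TENANT_CLAIM: "tenant-3"})
    print(tenant_from_token(token))
```

Once the tenant identifier is available from a verified token, it can be used to drive the routing described earlier, so that each request reaches the silo that belongs to the calling tenant.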

Once we complete these fundamental steps, we will have all the moving parts in place to begin migrating our monolith application tier to a series of serverless functions.